In a world where buzzwords such as clicks, impressions, interactions and followers dominate what, when and how we behave online, finding authentic and non-sensationalised content across social media platforms has never been so difficult.

In hindsight, the rise in dystopian tendencies across social media is somewhat intuitive: with human existence catapulted into the digital realm by the Information Age, it was only a matter of time until our primitively bound egos caught up. Further, now that we’re almost 20 years down the line from when Facebook first launched, the internet has evolved in a way that makes our online conduct just as important as what we do IRL (if not more so).

Ultra-bizarre as it is in an objective sense (imagine showing the cavemen of 2000 AD the efforts and ‘pain’ that some Instagram models go through in order to get a few more followers), this is the harsh reality (or dis-reality) that we’ve cultivated for ourselves through clickbait culture. Be it the fact that online ‘clout’ is now the most powerful currency in the world, or on a smaller scale, that traditional sports broadcasters have now replaced their ex-footballer punditry teams with ‘football YouTubers,’ it’s evident that our online worlds are now largely driven by the aforementioned buzzwords: clicks, impressions, interactions and followers.

And for anyone who’s ever watched an episode of Black Mirror, this fictitious online world into which we’ve been sleepwalking may seem all too familiar, which again is rather worrying given the eeriness of many of that show’s episodes. In addition, given that an estimated 4.9 billion of us use social media in some form (per Forbes), with 1.628 billion being on Instagram and almost 3 billion being on Facebook (per DataReportal), it appears that the large majority of us will engage with clout-chasing chaos at some point in our digital lives.

AI in Small Scale Social Media

By nature, technologies evolve in tandem with one another, meaning the arrival of Web3 and artificial intelligence (AI) on social media was only a matter of time. For 2023, what’s of primary concern here is the benign introduction of NFTs and crypto, as well as the more malignant, wild-west addition of AI-generated text and images. This article will focus on the latter.

On the AI end of things, we can look at the matter from two perspectives in time: its impact on today’s landscape, or how it may revolutionise the space in years to come.

When it comes to today, it’s pretty intuitive (that is, without much research) to see how AI can encourage even more distastefulness and inauthenticity across social media, as in essence, the tech allows people’s fake lives to become even faker. More specifically, the ‘generative’ prowess of many of today’s leading programs allows users to obtain and post content that isn’t necessarily ‘them’ or ‘theirs’. For example, this could entail the unrealistic use of AI-generated (or edited) images of themselves, or the posting of a passage of text which comes straight from the mouth of ChatGPT.

Although it would be wrong to tarnish every social media user (or ‘influencer’) with the same dystopian brush, it’s been clear for a while now that image edits, content stealing, and general clout chasing have become go-to tendencies for people navigating the online world. Further, with the introduction of tools which allow even more of this, detecting truly authentic content will become an increasingly hard task.

AI in Large Scale Social Media

In comparison to the world’s global issues, an influencer deciding to use AI-generated images to show off their ‘disappointingly rainy’ trip to the expectedly-sunny Algarve seems a rather trivial point of concern.

Of course, you’d not be wrong in thinking this. However, with the instantaneous generative capabilities that we now have at our disposal, damage on a much grander scale can also be done through the use of AI.

One of the most intuitive cases in which AI-generated images could be used wrongfully is in the context of scaremongering and propaganda. For example, an AI-generated, photo-realistic image showing an explosion near the Pentagon began circulating online in recent days (with reports stating that it came from Russian ‘Z-channels’). Within hours the image had caught the attention of news reporters and, after being reported as real (or at least speculatively so), it caused the S&P 500 to drop thirty points within minutes.

To add a little context on the scale at which confusion can be spread, an international military-focused Twitter page with over 336,000 followers was one of the verified pages to share the photo.

On the propaganda side of things, AI-generated images can be produced in abundance. This is because the process of creation simply involves users typing out a scenario which makes a particular party of interest look bad or good. For example, earlier this year saw the release of AI-generated images which showed Donald Trump being tackled to the floor by dozens of police officers.

For the sake of his own PR, as well as his chances in the upcoming 2024 election, these fake images would’ve been damaging if seen by someone who’s none the wiser and/or too lazy to do more research into the situation. In addition, and regardless of your political stance, creating fake narratives about such a sensitive and controversial figure does no good for society.

Of course, such slanderous defamation can be felt by literally anyone who has at least one detractor, i.e. basically every public figure/entity in existence. Whilst this is bad enough on its own, things become worse when considering the lack of time and forethought that many of today’s users have when it comes to uncovering the true authenticity of the content they’re exposed to. With these factors in mind (i.e. fictitious photorealistic images being exposed to none-the-wiser audiences), it doesn’t take a rocket scientist to see how AI-generated images can be a harmful tool for blurring the distinction between fiction and non-fiction.

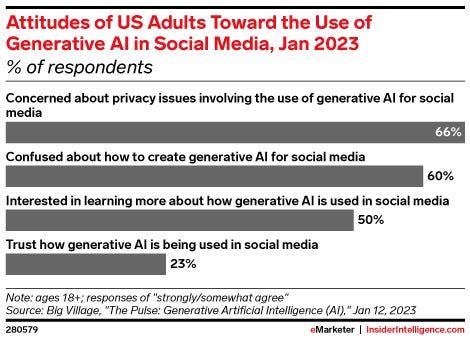

In fact, many of today’s social media users are already clued-up on such dangers (or so they say): a survey from Big Village in January of this year asked US adults about their attitudes towards ‘the Use of Generative AI in Social Media’. Here, the results, which combined ‘strongly agree’ and ‘somewhat agree’ answers, showed that a whopping 66% were ‘concerned about privacy issues involving the use of generative AI for social media,’ whilst 60% claimed to be ‘confused about how to create generative AI for social media’.

On the more optimistic side of things, 23% of participants said that they ‘trust how generative AI is being used in social media,’ whilst 50% said that they’re ‘interested in learning more about how generative AI is used in social media’.

Final Thoughts

When it comes down to it, the AI-generated illegitimacies of today all boil down to the concept of misinformation – which, if you look at the term’s fundamental premise, can even relate to the situation regarding the hypothetical influencer’s disappointing trip to the Algarve. Again, this is because AI tech and its generative capabilities have the ability to instantaneously create an infinitely diverse array of human-like creations.

Whilst images have been the main focus here, AI creations by no means stop there. As of May 2023, a flurry of new text-to-speech use cases has sprung up across social media, with such content including AI-generated songs from deceased artists such as Biggie Smalls, new tracks and covers from the likes of Kanye West, and comical conversations between world leaders.

Quite worryingly, the platforms behind such content (such as Love.ai, Speechify, and Murf) already have ultra-realistic capabilities, which is why text-to-speech AI-generated content already has the potential to be used unlawfully, through users posting ‘seemingly legit’ evidence of a person saying something that they didn’t.

For many, Elon Musk’s controversial ‘Twitter Blue’ verification policies are adding even more fuel to the AI-driven slander fire, as the opportunities to impersonate public figures – be it celebrities, government officials, news websites etc. – are said to have increased tenfold.

However, quite astoundingly, it’s easy to forget that all of these troubles come amidst AI’s first ‘proper’ year in the spotlight. For now, the space and its use cases seem as Wild West as the rug-pulling Web3 space. To add a little perspective, however, both areas of innovation are still in the very nascent days of their development and pop-culture influence.

With this in mind, it’s perhaps a little hasty to reach a conclusion as to whether AI is a good thing for social media or not, as new (and perhaps currently incomprehensible) standards for protection and authentication may come to the surface in years to come.

And on the flip side, we must remember that there is often a Yin and Yang to all things in life, which is why AI-generated content can also offer today’s social media goers a whole host of positive opportunities.